In the fast-changing world of robotics and autonomous systems, one technology stands out as a game-changer: SLAM. Imagine a robot exploring an unfamiliar environment, dynamically building a map while pinpointing its location within it. This sophisticated dance of perception and mapping is not just a futuristic fantasy but a reality transforming industries from automotive to healthcare. Let’s dive into the fascinating world of 3D SLAM and unravel the complexities behind this groundbreaking technology.

SLAM stands for Simultaneous Localization and Mapping. Initially used in military applications to help drones and robots execute tasks in complex environments, SLAM has gradually entered civilian use. Today, 3D SLAM is used in applications such as household vacuum robots and autonomous vehicles, significantly enhancing the intelligence of these devices.

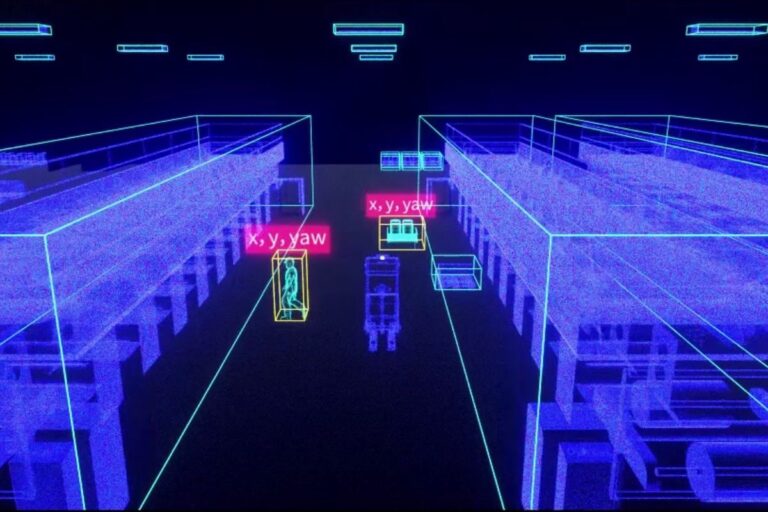

SLAM enables robots to localize themselves and construct a map of an unknown environment at the same time. Specifically, a SLAM system uses sensors such as depth cameras or LiDAR to collect environmental data, then applies algorithms to estimate the device’s location while constructing a map of the surroundings. This solves a chicken-and-egg problem: building a map normally requires knowing where the sensor is, and locating the sensor normally requires a map. SLAM is widely applied in fields like robot navigation, autonomous driving, and augmented reality (AR), allowing devices to navigate complex environments without predefined maps.
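To make the mapping half of this concrete, here is a minimal sketch that marks obstacle cells in a 2D occupancy grid from range readings. It assumes the sensor pose is already known, which a real SLAM system would have to estimate at the same time. The function name `mark_scan`, the grid layout, and the 0.5 m cell size are illustrative assumptions, not part of any particular product.

```python
import math

def mark_scan(grid, pose, scan, cell_size=0.5):
    """Mark grid cells hit by range readings taken from a known pose.

    grid: list of rows, 0 = free or unknown, 1 = occupied (modified in place)
    pose: (x, y, heading) of the sensor in metres and radians
    scan: list of (bearing, distance) pairs relative to the heading
    """
    x, y, heading = pose
    for bearing, distance in scan:
        # Transform the hit point from the sensor frame into the map frame.
        hx = x + distance * math.cos(heading + bearing)
        hy = y + distance * math.sin(heading + bearing)
        col = int(hx // cell_size)
        row = int(hy // cell_size)
        # Ignore readings that land outside the mapped area.
        if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
            grid[row][col] = 1
    return grid

# Hypothetical example: a 3 m x 3 m map and two 1 m readings,
# one straight ahead and one 90 degrees to the left.
grid = [[0] * 6 for _ in range(6)]
pose = (1.25, 1.25, 0.0)
scan = [(0.0, 1.0), (math.pi / 2, 1.0)]
mark_scan(grid, pose, scan)
```

In a full system the pose passed to this step would itself come from the localization estimate, which is why errors in one half feed into the other.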

3D SLAM systems typically comprise two main modules: the Front End and the Back End. The Front End handles initial data processing, including feature extraction, motion estimation, and data association, while the Back End optimizes these estimates to improve the system’s accuracy and robustness.
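The split between the two modules can be illustrated with a toy one-dimensional pose graph. In this sketch, the Front End is assumed to have produced relative measurements between poses (drifting odometry plus one loop closure), and the Back End adjusts the poses so that all measurements agree as well as possible in the least-squares sense. The solver below uses simple repeated averaging (Gauss-Seidel sweeps) for readability; production systems typically use sparse nonlinear solvers. All names and numbers are hypothetical.

```python
def optimize_poses(num_poses, constraints, sweeps=200):
    """Least-squares fit of 1D poses to relative measurements.

    constraints: list of (i, j, z), meaning "pose j minus pose i was measured as z".
    Pose 0 is held at 0.0 as the reference.
    """
    x = [0.0] * num_poses
    for _ in range(sweeps):
        for k in range(1, num_poses):
            # Each constraint touching pose k suggests a value for it.
            # With the neighbours held fixed, the least-squares optimum
            # for pose k is the mean of those suggestions.
            targets = []
            for i, j, z in constraints:
                if j == k:
                    targets.append(x[i] + z)
                elif i == k:
                    targets.append(x[j] - z)
            if targets:
                x[k] = sum(targets) / len(targets)
    return x

# Front End output (assumed): four odometry steps that each over-read
# a true 1.0 m move as 1.1 m, plus one loop closure saying the last
# pose is 4.0 m from the first.
odometry = [(i, i + 1, 1.1) for i in range(4)]
loop_closure = [(0, 4, 4.0)]
poses = optimize_poses(5, odometry + loop_closure)
```

Dead reckoning alone would place the final pose at 4.4 m. With the loop closure included, the optimizer spreads the disagreement evenly over all five constraints and the final pose settles near 4.08 m.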

Depending on the sensors used, SLAM can be categorized into visual SLAM, LiDAR SLAM, and Inertial Measurement Unit (IMU) SLAM. Visual SLAM uses cameras to capture image information, LiDAR SLAM uses laser scanners to detect environmental structures, and IMU SLAM employs inertial sensors for self-localization.

Environment Awareness

By constructing environmental maps, SLAM systems can identify obstacles, enabling effective obstacle avoidance.

High Adaptability

SLAM systems adapt to varying lighting conditions, dynamic environment changes, and diverse terrains, providing stable navigation capabilities.

Autonomous Navigation

SLAM technology allows robots to move autonomously in environments without external navigation aids, which is crucial for exploring unknown areas or navigating indoors without GPS signals.

Path Planning

Accurate environmental maps help robots or vehicles plan efficient paths, selecting optimal or safe routes.
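As a rough illustration of path planning on a SLAM-built map, the sketch below runs a breadth-first search over a small occupancy grid and returns a shortest obstacle-free route. The grid, the 4-connected movement model, and the function name `shortest_path` are assumptions made for the example; real planners usually add costs, robot footprint, and smoothing.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected occupancy grid.

    grid: list of rows, 0 = free, 1 = obstacle
    Returns the list of (row, col) cells from start to goal,
    or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None

# Hypothetical map: a wall with a single gap in the third column.
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
path = shortest_path(grid, (0, 0), (2, 0))
```

Because breadth-first search expands cells in order of distance, the first route that reaches the goal is one with the fewest steps.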

In indoor or underground environments without GPS signals, SLAM navigation is essential. Robots analyze sensor data to identify environmental features and use this information to estimate their position. For example, visual SLAM systems use 2D or 3D cameras to capture images of the environment, along with depth data where available, and then employ feature matching and motion estimation algorithms to track robot movement and build environmental maps. LiDAR SLAM systems sense environmental structures by emitting laser beams and analyzing the returned signals.
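The motion estimation step can be sketched in two dimensions. Assuming feature matching has already paired up the same landmarks in two consecutive frames, the closed-form least-squares fit below recovers the rotation and translation that map the earlier points onto the later ones. Since the landmarks are static and the sensor moves, the sensor's own motion is the inverse of this apparent transform. The function name and the sample points are hypothetical, and real systems work in 3D and must also reject bad matches.

```python
import math

def estimate_motion(prev_pts, curr_pts):
    """Least-squares 2D rigid transform mapping prev_pts onto curr_pts.

    Both arguments are equal-length lists of (x, y) for matched features.
    Returns (theta, tx, ty): rotate prev by theta, then shift by (tx, ty).
    """
    n = len(prev_pts)
    pcx = sum(p[0] for p in prev_pts) / n
    pcy = sum(p[1] for p in prev_pts) / n
    qcx = sum(q[0] for q in curr_pts) / n
    qcy = sum(q[1] for q in curr_pts) / n

    # Accumulate dot and cross products of the centred point pairs;
    # their ratio gives the optimal rotation angle.
    s_dot = 0.0
    s_cross = 0.0
    for (px, py), (qx, qy) in zip(prev_pts, curr_pts):
        ax, ay = px - pcx, py - pcy
        bx, by = qx - qcx, qy - qcy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)

    # The translation aligns the rotated centroid with the new centroid.
    c, s = math.cos(theta), math.sin(theta)
    tx = qcx - (c * pcx - s * pcy)
    ty = qcy - (s * pcx + c * pcy)
    return theta, tx, ty

# Hypothetical matched features: the second frame sees the same
# landmarks rotated by 0.3 rad and shifted by (1.0, -2.0).
prev_pts = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 3.0)]
true_theta, true_tx, true_ty = 0.3, 1.0, -2.0
curr_pts = [
    (math.cos(true_theta) * x - math.sin(true_theta) * y + true_tx,
     math.sin(true_theta) * x + math.cos(true_theta) * y + true_ty)
    for x, y in prev_pts
]
theta, tx, ty = estimate_motion(prev_pts, curr_pts)
```

With noise-free matches the fit reproduces the transform used to generate the second frame; with noisy matches it returns the best fit in the least-squares sense.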

SLAM is crucial in autonomous vehicles for accurate environmental perception and positioning, enabling stable navigation in diverse environments such as city streets and highways by integrating data from cameras and LiDAR sensors.

In the industrial field, mobile robots utilize SLAM technology for autonomous navigation, performing tasks such as transportation, cleaning, and monitoring in indoor environments like warehouses, hospitals, and shopping malls.

For instance, the MRDVS Ceiling Vision SLAM solution offers industry-leading precision and collaboration capabilities among robots. The robot needs to travel a path only once: an onboard 3D camera automatically scans the ceiling, and AI algorithms quickly build the map, providing reliable navigation for mobile robots. This technology addresses the limitations of 2D LiDAR in complex and changing environments.

MRDVS CV SLAM navigation

Household vacuum robots use SLAM to locate themselves and construct indoor environment maps. This enables the robot to understand its position in the room and the layout of the surroundings. Based on SLAM-constructed maps, vacuum robots can plan efficient cleaning paths, ensuring coverage of all areas needing cleaning. SLAM navigation combined with sensors allows vacuum robots to identify and avoid obstacles, preventing collisions and achieving smarter navigation.

A photovoltaic company with heavy personnel and vehicle traffic in its production workshops, and with high docking accuracy requirements for its machines, adopted the MRDVS Ceiling Vision SLAM Scanner solution to replace traditional 2D LiDAR navigation. The company applied the MRDVS SLAM navigation system to its integrated photovoltaic handling robots, and the system has been operating stably for over a year. Across two workshops covering 80,000 square meters, more than 500 Automated Guided Vehicles (AGVs) equipped with this vision SLAM module have operated without failures or loss of positioning.

A leading Chinese new energy company, whose open workshop has mixed human and vehicle traffic and widely varying component storage, adopted the MRDVS 3D SLAM navigation system. The system was installed on large roll-handling AGVs responsible for transporting vehicle engines.

A well-known clothing company, whose spacious warehouse has over 4,000 storage locations and frequent material changes, adopted the MRDVS Ceiling Vision SLAM navigation solution. The navigation system was installed on automatic forklift robots responsible for transporting pallets.

3D SLAM is now crucial in civilian applications like autonomous vehicles and household robots. It enables simultaneous navigation and mapping using depth cameras and LiDAR sensors. SLAM is essential for autonomous navigation in areas without GPS, allowing robots to construct maps, identify obstacles, and plan efficient paths.

The MRDVS 3D SLAM Scanner navigation solution, leveraging exclusive ceiling vision technology, adapts to various factory environments, including buildings with ceiling heights from 2 to 12 meters and narrow passages. The solution builds deep learning networks for industrial applications, trained on extensive industrial scenario data to provide robust target detection in diverse working environments.

The MRDVS Ceiling Vision SLAM system can be used on SMT loading and unloading robots, forklifts, cleaning robots, and other vehicles. It is suitable for a variety of models.

The products in the solution are suitable for factory buildings with a ceiling height of 2-12m. They are also suitable for long narrow passages.

The MRDVS sensor’s accuracy can reach up to 1 cm, with specific precision varying depending on the type of camera used.

MRDVS 3D SLAM cameras use high-resolution RGB-D cameras to capture the features of the ceiling. The MRDVS CV SLAM system uses deep learning and segmentation technology to navigate changing environments accurately. The solution achieves accurate and reliable autonomous localization through high-precision recognition and tracking of key industrial scene targets.

©Copyright 2023 Zhejiang MRDVS Technology Co.,Ltd.
