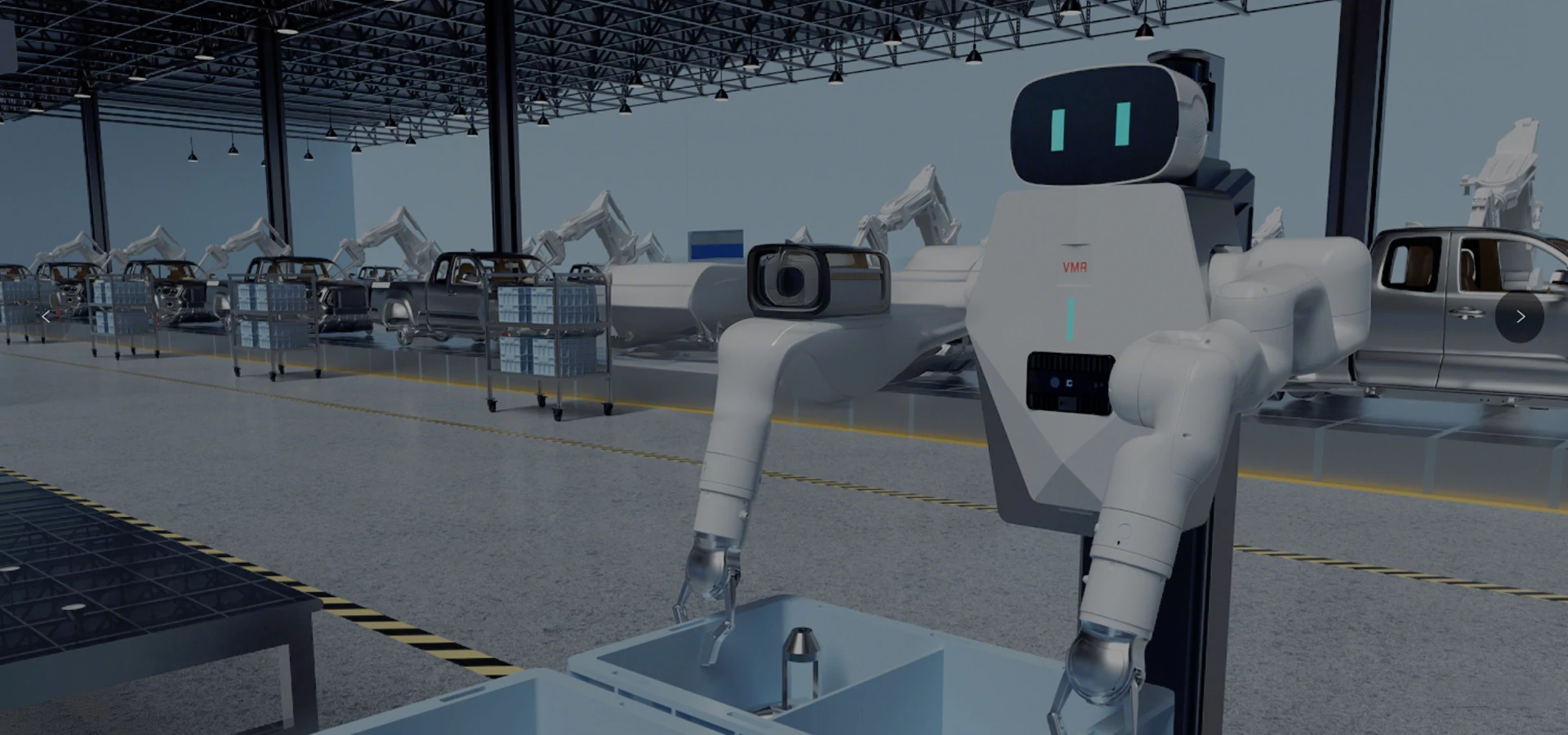

Imagine a robot that can not only see but truly understand the world around it: navigating crowded warehouses, picking fragile objects with precision, or even recognizing human gestures instantly. This is the power of depth sensing cameras, the game-changing technology that’s transforming ordinary robots into smart, aware machines. By capturing detailed 3D information of their environment, these cameras give robots the vision they need to make split-second decisions and operate safely alongside humans. In this article, we’ll dive into how depth sensing cameras are fueling the rise of smarter robotics and reshaping industries across the globe.

Core Technologies Powering Depth Sensing Cameras

Depth sensing cameras rely on innovative technologies to capture accurate three-dimensional information about their surroundings. Understanding these core methods helps explain how robots gain the “eyes” to perceive depth and spatial relationships. Here are the primary technologies behind modern depth sensing cameras:

- Time-of-Flight (ToF) Cameras

ToF cameras emit infrared light pulses and measure the time it takes for the light to reflect back from objects. This time delay is used to calculate precise distances, creating real-time depth maps. ToF systems come in two main types: direct ToF (dToF), which measures the exact time delay, and indirect ToF (iToF), which infers distance from phase shifts. They excel in providing accurate depth data with high frame rates, making them ideal for dynamic environments like robotics. However, they can face challenges with reflective surfaces and strong ambient light.

- Structured Light Cameras

These cameras project a known infrared pattern—such as grids or dots—onto the environment. The camera then captures how this pattern deforms over surfaces to infer depth information. Structured light is highly effective in indoor, close-range scenarios, offering detailed depth maps with high resolution. This method is often used in gesture recognition and small object scanning but is less suited for outdoor or large-scale environments due to sunlight interference.

- Stereo Vision Systems

Stereo vision uses two or more cameras spaced apart to mimic human binocular vision. By comparing differences (disparity) between images captured by each camera, it calculates depth information. Stereo systems are versatile and can perform well in various lighting conditions, making them suitable for outdoor robotics. However, they require sophisticated algorithms to handle occlusions and textureless surfaces and typically have higher computational demands.

- Passive Monocular Depth Techniques

Emerging methods involve using a single camera with advanced optics—like meta-imaging lenses or light-field technology—to estimate depth passively without active illumination. These approaches promise smaller form factors and lower power consumption, which are beneficial for compact or battery-powered robots. Although still under development, passive monocular depth sensing could reshape how future robots perceive their environment.
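
To make the ToF principle described above concrete, here is a minimal Python sketch of the two distance calculations. It uses the standard textbook relations (half the round-trip path for direct ToF, phase shift of a modulated signal for indirect ToF); the function names and the 20 MHz modulation frequency are illustrative assumptions, not values from any particular camera.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def dtof_distance(round_trip_s: float) -> float:
    """Direct ToF: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def itof_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Indirect ToF: distance inferred from the phase shift of a modulated signal."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def itof_unambiguous_range(mod_freq_hz: float) -> float:
    """Distance at which the phase wraps past 2*pi and readings start to alias."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    # Hypothetical readings: a 20 ns round trip, and a half-cycle phase shift at 20 MHz.
    print(dtof_distance(20e-9))
    print(itof_distance(math.pi, 20e6))
    print(itof_unambiguous_range(20e6))
```

The unambiguous-range function hints at one practical trade-off of indirect ToF: a higher modulation frequency gives finer phase resolution but a shorter distance before readings wrap around.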

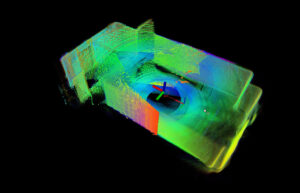

How Depth Sensing Cameras Help in 3D Mapping

3D mapping is a cornerstone capability for many advanced robotic systems, enabling machines to understand and interact with their environment in a spatially aware manner. Depth sensing cameras play a pivotal role in this process by capturing precise distance information and transforming it into detailed three-dimensional models.

Accurate Spatial Awareness

Depth-sensing cameras generate real-time depth maps by measuring the distance between the sensor and surrounding objects. This spatial data allows robots to construct 3D point clouds—dense sets of points representing surfaces and structures in the environment. These point clouds serve as the foundation for accurate 3D maps, helping robots perceive shapes, sizes, and relative positions of obstacles or targets.
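
As a rough illustration of how a depth map becomes a point cloud, the sketch below back-projects each pixel through a pinhole camera model. The intrinsics (`fx`, `fy`, `cx`, `cy`) are assumed to come from the camera's calibration, and treating a depth of zero as "no reading" is a common convention assumed here rather than something every sensor guarantees.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_point_cloud(
    depth_m: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a depth map (metres) into camera-frame 3D points.

    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    Pixels with depth <= 0 are skipped as invalid (an assumed convention).
    """
    points: List[Point] = []
    for v, row in enumerate(depth_m):
        for u, z in enumerate(row):
            if z <= 0.0:
                continue
            x = (u - cx) * z / fx  # lateral offset grows with depth
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # A hypothetical 2x2 depth map with two valid pixels.
    tiny_depth = [[0.0, 2.0], [1.0, 0.0]]
    print(depth_to_point_cloud(tiny_depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5))
```

Real pipelines usually do this with vectorized array operations for speed; plain loops are used here only to keep the geometry visible.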

Facilitating Simultaneous Localization and Mapping (SLAM)

Many robotic systems rely on SLAM algorithms to build and update maps of unknown environments while simultaneously tracking their own position. Depth-sensing cameras provide crucial 3D input to SLAM, enabling better environment modeling and improved localization accuracy. For example, MRDVS’s RGB-D cameras deliver high-resolution depth data that enhances SLAM performance in complex industrial settings, allowing robots to navigate safely and efficiently.

Enabling Detailed Environment Reconstruction

Beyond simple navigation, depth cameras enable detailed environment reconstruction for tasks like inspection, quality control, and augmented reality applications. Robots equipped with these sensors can detect minute surface defects or changes in structure by comparing 3D maps over time.
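
One simple way to picture "comparing 3D maps over time" is a per-pixel difference between two depth maps. The sketch below assumes both maps were captured from the same camera pose (or have already been aligned), and the 5 mm tolerance is a hypothetical value; real inspection systems would choose it from the sensor's noise characteristics.

```python
from typing import List, Tuple


def changed_pixels(
    reference_m: List[List[float]],
    current_m: List[List[float]],
    tolerance_m: float = 0.005,
) -> List[Tuple[int, int, float]]:
    """Return (u, v, delta) for pixels whose depth moved by more than tolerance_m.

    Pixels where either map has no valid reading (depth <= 0) are ignored.
    """
    changes: List[Tuple[int, int, float]] = []
    for v, (ref_row, cur_row) in enumerate(zip(reference_m, current_m)):
        for u, (ref_z, cur_z) in enumerate(zip(ref_row, cur_row)):
            if ref_z <= 0.0 or cur_z <= 0.0:
                continue
            delta = cur_z - ref_z
            if abs(delta) > tolerance_m:
                changes.append((u, v, delta))
    return changes
```

A positive `delta` means the surface is now farther from the camera (material missing, for example), while a negative one means something has moved closer.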

Supporting Dynamic and Unstructured Environments

Depth-sensing cameras are especially valuable in dynamic or unstructured environments where obstacles can move or appear unpredictably. The ability to capture continuous 3D data allows robots to update maps in real time, facilitating adaptive path planning and obstacle avoidance.
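
A common way to turn continuous 3D data into something a path planner can use is a 2D occupancy grid that is rebuilt every frame. The following is a simplified sketch under several assumptions: points are in the camera frame (x to the right, z forward), floor points have already been filtered out, and the grid dimensions are hypothetical.

```python
from typing import Iterable, List, Tuple


def occupancy_grid(
    points: Iterable[Tuple[float, float, float]],
    cell_size_m: float,
    width_cells: int,
    depth_cells: int,
) -> List[List[bool]]:
    """Mark each grid cell that contains at least one 3D point.

    Rows index forward distance (z); columns index lateral offset (x),
    with the camera centred on the middle of the grid's width.
    """
    grid = [[False] * width_cells for _ in range(depth_cells)]
    half_width_m = width_cells * cell_size_m / 2.0
    for x, _y, z in points:
        col = int((x + half_width_m) // cell_size_m)
        row = int(z // cell_size_m)
        # Points outside the mapped area are simply dropped.
        if 0 <= col < width_cells and 0 <= row < depth_cells:
            grid[row][col] = True
    return grid
```

Because the grid is recomputed from the latest frame, an obstacle that moves simply occupies different cells on the next update, which is what allows the planner to adapt.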

Stereo vs. ToF: Which Depth Sensing Camera Wins?

When selecting a depth sensing camera for robotics or other applications, understanding the differences between Stereo Vision and Time-of-Flight (ToF) cameras is essential. Each technology has its strengths and weaknesses, making them better suited for different environments and tasks.

| Feature | Stereo Vision Cameras | Time-of-Flight (ToF) Cameras |
| --- | --- | --- |
| Working Principle | Uses two or more cameras to calculate depth from disparity between images | Emits infrared light pulses and measures return time to calculate depth |
| Performance in Sunlight | Performs well outdoors; not washed out by sunlight, though it needs enough ambient light to see texture | Can be disrupted by strong sunlight or reflective surfaces |
| Depth Accuracy | Good accuracy but dependent on texture and contrast | High accuracy even in low-texture or dark environments |
| Range | Typically longer effective range | Usually shorter range compared to stereo |
| Computational Load | High: requires complex algorithms for depth calculation | Lower: provides direct depth measurement |
| Power Consumption | Generally lower, passive system | Higher due to active illumination |
| Suitability | Best for outdoor, textured, and large-scale environments | Ideal for indoor, dynamic scenes and low-light conditions |
| Size and Integration | Larger system with multiple cameras | More compact and easier to integrate |

In practice, the choice between stereo and ToF cameras depends on the application’s specific needs:

- Outdoor autonomous robots often prefer stereo vision for its robustness under natural lighting and longer range.
- Indoor robots and fast-moving systems benefit from ToF cameras due to their fast, direct, and accurate depth sensing.
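
For readers weighing the stereo side of this comparison, the sketch below shows the standard disparity-to-depth relation and how a fixed matching error translates into depth uncertainty. The focal length, baseline, and quarter-pixel matching error are hypothetical values chosen for illustration.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from disparity for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a valid match")
    return focal_px * baseline_m / disparity_px


def stereo_depth_error(
    focal_px: float,
    baseline_m: float,
    depth_m: float,
    disparity_error_px: float = 0.25,
) -> float:
    """Approximate depth uncertainty: dZ = Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_error_px / (focal_px * baseline_m)


if __name__ == "__main__":
    # Hypothetical rig: 800 px focal length, 10 cm baseline.
    print(stereo_depth(800.0, 0.1, 40.0))
    print(stereo_depth_error(800.0, 0.1, 2.0))
    print(stereo_depth_error(800.0, 0.1, 4.0))
```

Because the error grows with the square of the distance, doubling the range roughly quadruples the uncertainty, so a stereo system's usable range depends heavily on its baseline and resolution.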

Key Robotics Applications Powered by Depth Sensing Cameras

Depth-sensing cameras have become fundamental in advancing the capabilities of modern robotics. By providing accurate three-dimensional data, they unlock a range of sophisticated applications that enable robots to operate more intelligently and efficiently across various industries.

-

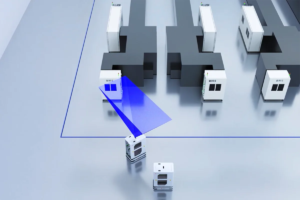

Navigation and Obstacle Avoidance

One of the most critical functions for autonomous robots is safe and efficient navigation. Depth sensing cameras, such as those developed by MRDVS, allow robots like Automated Guided Vehicles (AGVs) and Autonomous Mobile Robots (AMRs) to build real-time 3D maps of their environment. This enables precise obstacle detection and path planning, allowing robots to maneuver dynamically in crowded or complex spaces without collisions. For example, warehouse robots equipped with MRDVS cameras can use the depth data to avoid obstacles and optimize routes.

- Object Detection and Manipulation

In industrial and logistics settings, robots need to accurately detect, identify, and manipulate objects of various shapes and sizes. Depth sensing cameras from MRDVS provide detailed spatial information that aids robotic arms in grasping, sorting, and packaging tasks. This improves efficiency and reduces errors in processes like picking items from bins or assembling parts on production lines.

- Human-Robot Interaction and Safety

For robots working alongside humans, safety is paramount. Depth sensing cameras from MRDVS enable robots to recognize human presence and gestures, allowing for intuitive interaction and timely responses. Proximity sensing ensures robots maintain safe distances, while gesture recognition can facilitate hands-free control. This technology is especially important in service robots, collaborative manufacturing cells, and healthcare applications.

- Autonomous Decision-Making

Smarter robots use depth data from advanced sensors like those by MRDVS to feed algorithms such as Simultaneous Localization and Mapping (SLAM) and AI-driven scene understanding. By integrating 3D spatial information, robots can make autonomous decisions in real time—from selecting the best route to identifying unknown obstacles or changing environments. This capability is crucial for robots operating in dynamic, unstructured settings like agriculture or disaster response.
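
The proximity-sensing and obstacle-avoidance behavior described in this section can be pictured as a speed limit driven by the nearest valid depth reading. This is only a sketch: the stop and slow-down distances are hypothetical, are not taken from any safety standard, and a real collaborative robot would need a certified safety system.

```python
from typing import List, Optional


def nearest_obstacle_m(depth_m: List[List[float]]) -> Optional[float]:
    """Smallest valid depth in the frame, or None if no pixel has a reading."""
    valid = [z for row in depth_m for z in row if z > 0.0]
    return min(valid) if valid else None


def speed_limit(
    nearest_m: Optional[float],
    stop_m: float = 0.5,
    slow_m: float = 1.5,
    max_speed: float = 1.0,
) -> float:
    """Scale speed linearly from 0 at stop_m up to max_speed at slow_m."""
    if nearest_m is None:
        return 0.0  # no valid data: fail safe and stop (a design choice)
    if nearest_m <= stop_m:
        return 0.0
    if nearest_m >= slow_m:
        return max_speed
    return max_speed * (nearest_m - stop_m) / (slow_m - stop_m)
```

Stopping when the frame contains no valid readings is a deliberately conservative choice here, since an empty depth map can mean a sensor fault as easily as an empty room.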

How Do Depth Sensing Cameras Integrate with Computer Vision and AI?

Depth sensing cameras play a vital role in advancing robotic intelligence by providing rich 3D data that complements traditional computer vision and artificial intelligence (AI) technologies. Their integration enables robots to better understand, interpret, and interact with their surroundings in real time.

- Enhancing Perception with RGB-D and Thermal Data Fusion

Depth sensing cameras, such as those developed by MRDVS, often work alongside RGB (color) and thermal cameras to create comprehensive multispectral views of the environment. By fusing depth data with color and thermal information, robots can improve object detection, segmentation, and recognition accuracy—even in challenging lighting or weather conditions. This multimodal fusion enables applications like surveillance, quality inspection, and environmental monitoring.

- Camera-LiDAR Fusion for Autonomous Navigation

In advanced robotics and autonomous vehicles, combining data from depth sensing cameras with LiDAR systems helps create highly detailed 3D maps. This sensor fusion allows robots to leverage the strengths of both technologies—LiDAR’s long-range accuracy and the depth camera’s high-resolution spatial details. AI algorithms process this combined data to enhance scene understanding, obstacle detection, and path planning.

- AI-Driven Activity and Gesture Recognition

Depth-sensing cameras enable robots to capture detailed spatial cues necessary for human activity and gesture recognition. Using AI and machine learning models trained on depth data, robots can interpret human poses, detect movements, and respond appropriately. This capability is crucial for human-robot collaboration, healthcare monitoring, and interactive entertainment.

- Real-Time Decision Making and Edge Computing

The integration of depth-sensing cameras with AI-powered edge computing allows robots to analyze and react to their environment without relying on cloud connectivity. Companies like MRDVS design their cameras to support real-time depth data processing, enabling faster response times and improved autonomy in tasks such as navigation, inspection, and manipulation.

Get Started with Depth Sensing Cameras from MRDVS

Depth sensing cameras are essential for building smarter, more capable robots that navigate, interact, and adapt with precision. By delivering reliable 3D perception, they unlock advanced robotics applications across industries. MRDVS offers state-of-the-art depth sensing solutions designed to tackle these challenges, helping your robots perform more efficiently and safely. Take the next step in robotics innovation—discover MRDVS’s depth sensing cameras and empower your smart robots today.