Autonomous localization plays a critical role in modern automation and robotics. As applications multiply and demand grows, localization methods and technologies continue to evolve. From traditional sensor-based methods to modern techniques that use vision and deep learning, autonomous localization has become a key driver of industrial automation and intelligence.

Laser Localization Technology: Laser localization is widely used in industrial and commercial environments because of its high precision and stability. The robot scans its surroundings with a laser and calculates its position and orientation from the reflected laser data. However, this approach has limitations. In dynamic environments where people and transport equipment move frequently, laser localization can be disrupted. Moreover, in geometrically simple or repetitive environments (such as long corridors), localization accuracy can decrease, because the scan looks almost the same at every point along the corridor and the position along its axis is poorly constrained.
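The corridor case can be illustrated with a small sketch. It is not taken from MRDVS or from any particular laser SLAM system; it assumes a 2D scan in which every point carries a unit surface normal, and the names `constraint_eigenvalues` and `is_degenerate` and the 0.05 threshold are illustrative choices. Each scan point constrains the robot's position only along its surface normal, so summing the outer products of the normals shows which directions are well constrained.

```python
import math

def constraint_eigenvalues(normals):
    """Return (smallest, largest) eigenvalue of sum(n n^T) over 2D unit normals."""
    a = sum(nx * nx for nx, ny in normals)
    b = sum(nx * ny for nx, ny in normals)
    d = sum(ny * ny for nx, ny in normals)
    # Closed-form eigenvalues of the symmetric 2x2 matrix [[a, b], [b, d]].
    mean = (a + d) / 2.0
    spread = math.sqrt(((a - d) / 2.0) ** 2 + b * b)
    return mean - spread, mean + spread

def is_degenerate(normals, ratio_threshold=0.05):
    """Flag scans where one direction is barely constrained (assumed threshold)."""
    smallest, largest = constraint_eigenvalues(normals)
    return largest == 0.0 or smallest / largest < ratio_threshold

# Long corridor along the x axis: only the two side walls are visible,
# so every normal points along +y or -y.
corridor = [(0.0, 1.0)] * 50 + [(0.0, -1.0)] * 50

# Same walls plus two end walls whose normals point along +x or -x.
room = corridor + [(1.0, 0.0)] * 20 + [(-1.0, 0.0)] * 20

print(is_degenerate(corridor))  # True
print(is_degenerate(room))      # False
```

In the corridor the smallest eigenvalue is zero, meaning no scan point tells the robot how far it has moved along the corridor axis. The end walls in the second scan remove that ambiguity.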

Visual Localization Technology: Visual localization mimics the functionality of the human eye, extracting rich texture and color information from the environment to help robots understand and recognize their surroundings. This method is particularly suitable for complex and dynamically changing environments, significantly improving localization accuracy and reliability. However, traditional 2D visual SLAM (Simultaneous Localization and Mapping) methods face challenges, such as sensitivity to lighting changes and limitations in acquiring depth information.
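The depth limitation of a single 2D image can be shown with the standard pinhole camera model. The sketch below is illustrative only: the function name `project` and the intrinsic values (focal lengths and principal point in pixels) are assumptions, not parameters of any MRDVS sensor. It shows that two points on the same viewing ray, one twice as far away as the other, land on the same pixel, so the image alone cannot tell them apart.

```python
def project(point, fx=500.0, fy=500.0, cx=320.0, cy=240.0):
    """Project a 3D point (x, y, z) in camera coordinates to pixel (u, v)."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)

near_point = (1.0, 0.5, 4.0)   # metres, 4 m in front of the camera
far_point = (2.0, 1.0, 8.0)    # same viewing ray, twice as far away

print(project(near_point))  # (445.0, 302.5)
print(project(far_point))   # (445.0, 302.5)
```

Because both points map to the same pixel, a purely 2D method has to infer scale indirectly, which is one reason a direct depth measurement is useful.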

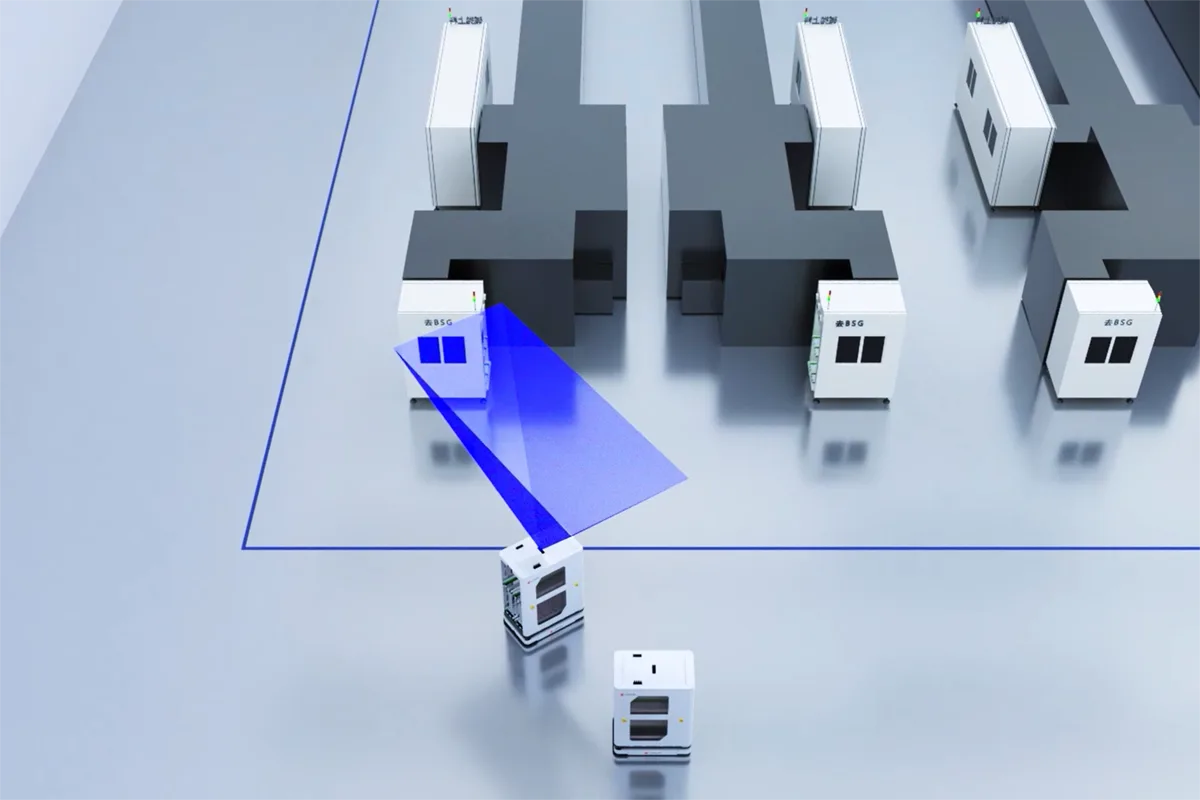

To address the challenges of traditional localization methods, the MRDVS Ceiling Visual SLAM Solution combines 2D visual data and 3D depth information using high-resolution visual sensors. By incorporating deep learning models and SAM (Semantic Augmentation Module) segmentation technology, this system provides high-precision localization and navigation in dynamic environments. The solution achieves accurate and reliable autonomous localization through high-precision recognition and tracking of key industrial scene targets, significantly enhancing indoor navigation capabilities.
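One way to picture how 2D semantic labels and 3D depth can be combined is sketched below. This is a minimal illustration under stated assumptions, not the MRDVS pipeline: the label names, the `DYNAMIC_LABELS` set, the function names, and the camera intrinsics are all hypothetical. Keypoints that fall on objects labeled as dynamic are discarded, and the remaining keypoints are lifted to 3D with the measured depth using the pinhole model.

```python
# Hypothetical class names for objects that move and should not be used as landmarks.
DYNAMIC_LABELS = {"person", "forklift", "material_cart"}

def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Lift pixel (u, v) with depth in metres to a 3D point in camera coordinates."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

def static_landmarks(keypoints, labels, depths, intrinsics):
    """Keep keypoints on static objects with valid depth and return them as 3D points."""
    fx, fy, cx, cy = intrinsics
    points = []
    for (u, v), label, z in zip(keypoints, labels, depths):
        if label in DYNAMIC_LABELS or z <= 0:
            continue  # skip moving objects and missing depth readings
        points.append(backproject(u, v, z, fx, fy, cx, cy))
    return points

intrinsics = (500.0, 500.0, 320.0, 240.0)          # assumed fx, fy, cx, cy in pixels
keypoints = [(320, 240), (420, 240), (100, 100)]
labels = ["ceiling_light", "person", "beam"]       # per-keypoint segmentation labels
depths = [4.0, 1.5, 0.0]                           # metres; 0.0 marks missing depth

print(static_landmarks(keypoints, labels, depths, intrinsics))  # [(0.0, 0.0, 4.0)]
```

Only the ceiling light survives: the second keypoint lies on a person and the third has no valid depth. Filtering of this kind is a common way to keep moving objects from corrupting a map.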

Deep Learning Model Construction: The project builds deep learning networks for industrial application scenarios from a large body of accumulated industrial data. Important target objects in the work environment (such as people, material carts, forklifts, goods, equipment, and signage) are labeled, and the dataset is updated and grows over time. The trained network generalizes well and is combined with SAM segmentation technology for precise semantic segmentation of 2D images.

Mapping Phase:

Localization Phase:

Visual Localization Module Development: The MRDVS Ceiling Visual SLAM Solution includes a visual localization module composed of high-resolution visual sensors and visual mapping and localization algorithms. Testing and research have shown that the solution effectively reconstructs spatial structures and accurately reflects the features of the actual environment, without the loop-closure errors and scale drift common in traditional visual SLAM methods.

Industrial Applications:

The MRDVS Ceiling Visual SLAM Solution represents a significant advancement in autonomous indoor navigation technology. By integrating 2D and 3D visual data with deep learning models, it offers high accuracy and reliability in dynamic environments. The solution is positioned to support the future of industrial automation, providing robust and scalable navigation capabilities for a range of applications.


© Copyright 2023 Zhejiang MRDVS Technology Co., Ltd.
