In mobile robotics, Visual Odometry (VO) and Visual SLAM (V-SLAM) are core technologies for autonomous navigation. They allow robots to estimate their position and map their environment using camera images. This overview covers the fundamentals, methods, and applications of VO and V-SLAM, drawing on research studies and existing implementations.

Visual Odometry estimates a robot’s motion by analyzing sequential camera images. It builds the robot’s trajectory incrementally by computing the displacement between consecutive frames. The technique is analogous to wheel odometry but relies on visual data, which is an advantage in environments where wheel slip or poor traction makes wheel odometry inaccurate.
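To make the incremental step concrete, here is a minimal sketch of how frame-to-frame motion estimates can be chained into a trajectory. It assumes planar motion and that each increment `(dx, dy, dtheta)` has already been estimated from a pair of consecutive images; the function names and the sample increments are hypothetical and chosen only for illustration.

```python
import numpy as np

def se2(dx, dy, dtheta):
    """Homogeneous 3x3 transform for a planar motion (dx, dy, dtheta)."""
    c, s = np.cos(dtheta), np.sin(dtheta)
    return np.array([[c, -s, dx],
                     [s,  c, dy],
                     [0.0, 0.0, 1.0]])

def integrate_odometry(increments):
    """Chain frame-to-frame motions into a trajectory of (x, y, theta) poses."""
    pose = np.eye(3)                      # start at the origin, heading along +x
    trajectory = [(0.0, 0.0, 0.0)]
    for dx, dy, dtheta in increments:
        # Each increment is expressed in the previous camera frame,
        # so it is composed on the right of the current pose.
        pose = pose @ se2(dx, dy, dtheta)
        theta = np.arctan2(pose[1, 0], pose[0, 0])
        trajectory.append((pose[0, 2], pose[1, 2], theta))
    return np.array(trajectory)

# Hypothetical increments: move 1 m forward, then turn 90 degrees left, four times.
square = [(1.0, 0.0, np.pi / 2)] * 4
trajectory = integrate_odometry(square)
```

With perfect increments the square path ends where it started. In practice every increment carries a small error, and because each pose is built on the previous one, those errors accumulate along the trajectory.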

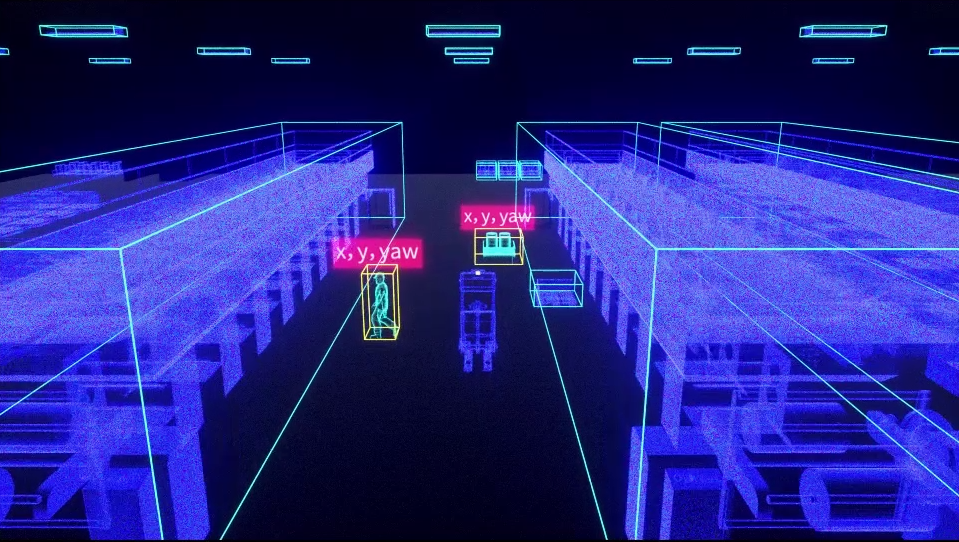

V-SLAM extends VO by building a map of the environment while localizing the robot within that map. It mitigates drift, the error that accumulates in incremental motion estimates, through loop closure: the system detects when the robot revisits a previously mapped area and uses that constraint to correct the accumulated error in both the map and the trajectory estimate.
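The effect of a loop closure can be illustrated with a deliberately simplified sketch: a one-dimensional pose graph solved by linear least squares. This is an illustration under stated assumptions, not the method of any particular V-SLAM system; real systems optimize 2D or 3D poses with nonlinear solvers. The function name, the `loop_weight` parameter, and the sample measurements are all hypothetical.

```python
import numpy as np

def optimize_pose_graph_1d(odometry, loop_closures, loop_weight=10.0):
    """Least-squares estimate of 1D poses x_0..x_n with x_0 anchored at 0.

    odometry:      measured displacements x_{i+1} - x_i
    loop_closures: list of (i, j, measured x_j - x_i)
    loop_weight:   how strongly loop closures are trusted relative to odometry
    """
    n = len(odometry) + 1
    rows, rhs = [], []

    # Anchor the first pose so the solution is unique.
    anchor = np.zeros(n)
    anchor[0] = 1.0
    rows.append(anchor)
    rhs.append(0.0)

    # One constraint per odometry measurement.
    for i, z in enumerate(odometry):
        row = np.zeros(n)
        row[i], row[i + 1] = -1.0, 1.0
        rows.append(row)
        rhs.append(z)

    # One (more heavily weighted) constraint per loop closure.
    for i, j, z in loop_closures:
        row = np.zeros(n)
        row[i], row[j] = -1.0, 1.0
        rows.append(loop_weight * row)
        rhs.append(loop_weight * z)

    x, *_ = np.linalg.lstsq(np.vstack(rows), np.array(rhs), rcond=None)
    return x

# Hypothetical run: the robot moves out two steps and back two steps,
# but every odometry reading is biased by +0.1.
odometry = [1.1, 1.1, -0.9, -0.9]
dead_reckoning = np.concatenate([[0.0], np.cumsum(odometry)])

# Loop closure: pose 4 is recognized as the same place as pose 0.
optimized = optimize_pose_graph_1d(odometry, [(0, 4, 0.0)])
```

Dead reckoning alone ends about 0.4 away from the start, while the optimized trajectory ends very close to it, with the correction spread across all intermediate poses instead of being applied only at the end.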

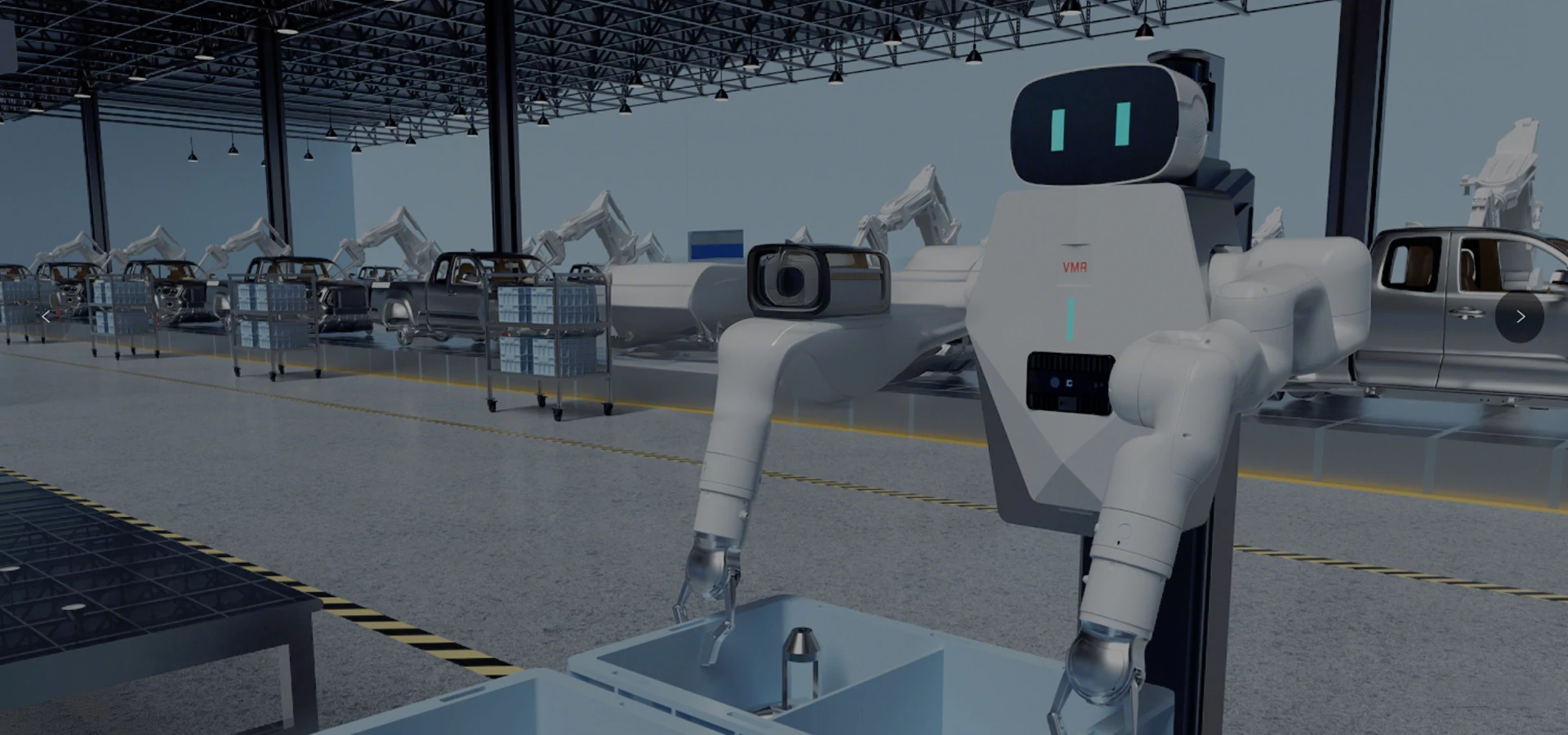

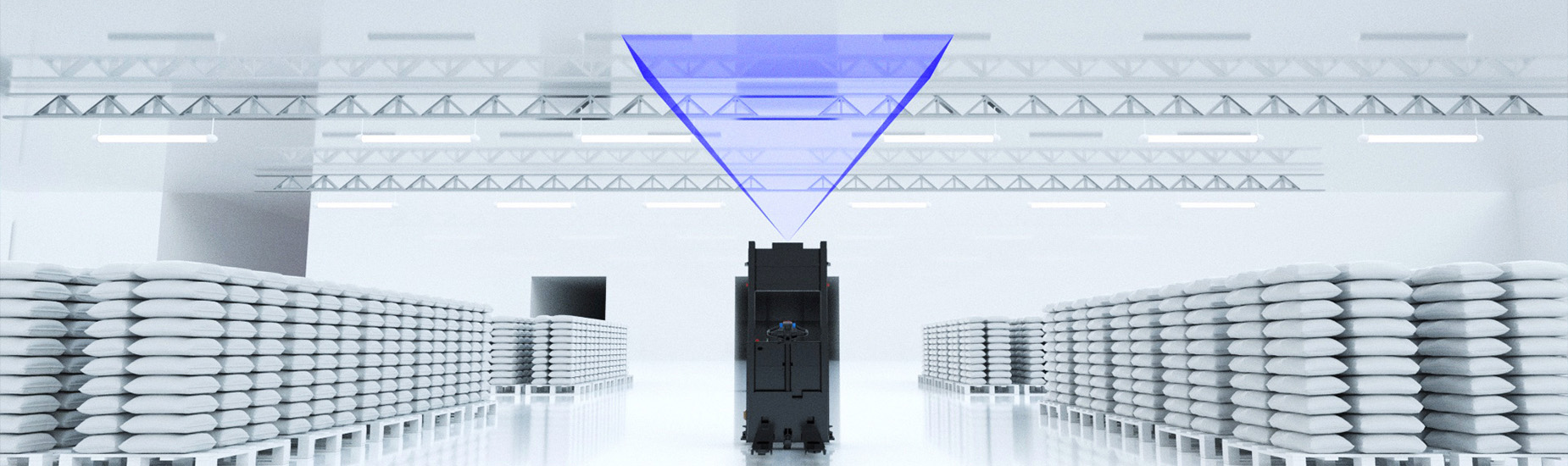

The MRDVS Ceiling Visual SLAM Solution applies these techniques to indoor navigation. Using a ceiling-facing camera system, it provides stable and accurate mapping and localization. The solution is designed to handle dynamic environments and to integrate with multi-robot systems, which makes it well suited to industrial automation, warehouse management, and similar applications.

With MRDVS, robots can efficiently navigate large, complex indoor spaces by leveraging the consistent features found on ceilings, such as lights and vents. The system’s robust data association techniques and advanced optimization algorithms ensure precise map construction and reliable localization, even in challenging conditions.
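As a generic illustration of what data association means in this setting, the sketch below matches observed ceiling features to known map landmarks using nearest-neighbor search with a distance gate. It is not a description of the MRDVS implementation, whose details are not given here; the function name, the `gate` threshold, and the coordinates are assumptions made for the example.

```python
import numpy as np

def associate(observations, landmarks, gate=0.5):
    """Match each observation to its nearest map landmark.

    Returns a list of (observation_index, landmark_index) pairs.
    Observations farther than `gate` from every landmark are left unmatched,
    so they can be treated as new features or as outliers.
    Note: this simple version does not enforce one-to-one matches.
    """
    observations = np.asarray(observations, dtype=float)
    landmarks = np.asarray(landmarks, dtype=float)
    # Pairwise Euclidean distances, shape (num_observations, num_landmarks).
    dists = np.linalg.norm(
        observations[:, None, :] - landmarks[None, :, :], axis=2
    )
    matches = []
    for obs_idx, row in enumerate(dists):
        lm_idx = int(np.argmin(row))
        if row[lm_idx] <= gate:
            matches.append((obs_idx, lm_idx))
    return matches

# Hypothetical map of three ceiling lights (x, y in meters).
map_lights = [[0.0, 0.0], [2.0, 0.0], [4.0, 0.0]]
# Hypothetical detections, already transformed into the map frame.
detections = [[0.1, -0.05], [2.1, 0.1], [7.0, 7.0]]

matches = associate(detections, map_lights)
```

Here the first two detections are matched to the first two lights, and the third is rejected by the gate because no landmark lies nearby. Matched pairs like these are what an optimization step would then use as constraints on the robot's pose.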

Experience the future of indoor navigation with MRDVS Ceiling Visual SLAM Solution, and unlock new levels of efficiency and autonomy for your robotic applications.

©Copyright 2023 Zhejiang MRDVS Technology Co.,Ltd.
