Autonomous mobile robots are used in applications ranging from industrial automation to service robotics, and navigating complex environments safely remains a significant challenge for them. In industrial settings, unsafe navigation can lead to accidents, inefficiencies, and increased operational costs. Traditional 2D navigation methods work well in some scenarios but fall short in real-world, three-dimensional environments, and the resulting safety issues are most apparent when robots encounter unexpected obstacles.

Challenges in Traditional 2D Navigation

Traditional 2D navigation systems rely heavily on planar representations of the environment, which can be adequate in controlled, flat settings. However, real-world environments are rarely so simple. They often contain various obstacles at different heights, uneven terrain, and dynamic elements that 2D systems cannot effectively manage. This inability to perceive and process the full three-dimensional aspect of the environment makes it difficult for robots to avoid obstacles accurately, leading to potential collisions and navigation errors.
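The limitation can be illustrated with a small sketch. It assumes a planar sensor that scans at a single fixed height, and every name and number in it (`Box`, `SCAN_HEIGHT`, `ROBOT_HEIGHT`, the obstacle dimensions) is hypothetical and chosen only for illustration. An obstacle is reported only if it crosses the scan plane, even though anything overlapping the robot's vertical extent is a collision risk.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Obstacle described only by its vertical extent in metres."""
    name: str
    z_min: float
    z_max: float


def seen_by_planar_scan(obstacle: Box, scan_height: float) -> bool:
    """A planar sensor only intersects obstacles that cross its scan plane."""
    return obstacle.z_min <= scan_height <= obstacle.z_max


def blocks_robot(obstacle: Box, robot_height: float) -> bool:
    """An obstacle is a collision risk if it overlaps the robot's vertical extent."""
    return obstacle.z_min < robot_height and obstacle.z_max > 0.0


SCAN_HEIGHT = 0.20   # hypothetical height of the 2D scan plane (m)
ROBOT_HEIGHT = 1.00  # hypothetical robot height (m)

obstacles = [
    Box("pallet", 0.00, 0.15),         # lies below the scan plane
    Box("shelf upright", 0.00, 2.00),  # crosses the scan plane
    Box("table top", 0.70, 0.75),      # overhangs above the scan plane
]

# Obstacles that can hit the robot but never appear in the 2D scan.
missed = [
    o.name
    for o in obstacles
    if blocks_robot(o, ROBOT_HEIGHT) and not seen_by_planar_scan(o, SCAN_HEIGHT)
]
print(missed)
```

In this toy setup the low pallet and the overhanging table top are both collision risks that the planar scan never reports.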

Innovative Solutions with RGB-D Multimodal Information

To address these challenges, the integration of RGB-D cameras and multimodal data fusion is essential. This approach leverages the rich color (RGB) and depth (D) information captured by RGB-D cameras, providing a comprehensive view of the environment. By combining these data types, mobile robots can achieve superior semantic recognition of objects, leading to more effective obstacle avoidance and enhanced navigation capabilities, ultimately improving industrial robot safety.

1. Comprehensive 3D Environment Representation

The proposed method begins with an advanced environment representation using RGB-D cameras. These cameras capture both color and depth information, providing a detailed 3D view of the surroundings. This data is then processed through a point cloud filtering pipeline and a cost map generator to create a local cost map, which the robot uses to better understand its environment and enhance safety.
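As a rough illustration of this step, the sketch below filters a point cloud and projects it onto a local cost map with NumPy. It is not the pipeline described above, whose details are not given here. The function names, the height and range thresholds, the grid size, and the two-level cost values are all assumptions, and the points are assumed to be already expressed in the robot frame (x forward, y left, z up, in metres).

```python
import numpy as np


def filter_points(points, z_min=0.05, z_max=1.2, max_range=4.0):
    """Keep only points that could collide with the robot.

    points: (N, 3) array in the robot frame. The thresholds are illustrative:
    z_min drops floor returns, z_max drops anything above the robot.
    """
    points = points[np.isfinite(points).all(axis=1)]  # drop NaN/inf returns
    z = points[:, 2]
    horizontal_range = np.hypot(points[:, 0], points[:, 1])
    keep = (z >= z_min) & (z <= z_max) & (horizontal_range <= max_range)
    return points[keep]


def local_cost_map(points, size_m=8.0, resolution=0.1, min_hits=2):
    """Project points onto a robot-centred 2D grid indexed as [ix, iy].

    Cells with at least min_hits points get cost 100, all others 0.
    Requiring several hits is a simple guard against isolated noisy points.
    """
    n = int(round(size_m / resolution))
    half = size_m / 2.0
    ix = np.floor((points[:, 0] + half) / resolution).astype(int)
    iy = np.floor((points[:, 1] + half) / resolution).astype(int)
    inside = (ix >= 0) & (ix < n) & (iy >= 0) & (iy < n)
    hits = np.zeros((n, n), dtype=np.int32)
    np.add.at(hits, (ix[inside], iy[inside]), 1)  # accumulate repeated indices
    return np.where(hits >= min_hits, 100, 0).astype(np.uint8)


# Tiny synthetic cloud: two points on one obstacle plus three that get filtered.
cloud = np.array([
    [1.03, 0.03, 0.50],
    [1.06, 0.06, 0.60],    # same grid cell as the point above
    [2.00, 1.00, 0.01],    # floor return, below z_min
    [1.50, -0.50, 2.00],   # above the robot, beyond z_max
    [np.nan, 0.00, 0.50],  # invalid depth
])
cost = local_cost_map(filter_points(cloud))
```

A production cost map would typically also inflate obstacles by the robot's footprint and decay stale cells over time. Both are omitted here to keep the sketch short.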

2. Multimodal Data Fusion

Multimodal data fusion involves integrating the RGB and depth data to enhance the robot’s perception and decision-making capabilities. By combining the visual richness of RGB images with the spatial details of depth data, robots can identify and classify objects more accurately, improving their ability to navigate complex environments safely.
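One simple form of RGB and depth fusion is to back-project each depth pixel into 3D and attach its colour, giving a coloured point cloud. The sketch below assumes a pinhole camera model, a depth image already aligned pixel-for-pixel with the RGB image, and depth in metres with 0 marking invalid pixels. The function name and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative and are not taken from any particular camera SDK.

```python
import numpy as np


def rgbd_to_colored_points(rgb, depth, fx, fy, cx, cy):
    """Fuse aligned RGB and depth images into an (N, 6) array.

    rgb:   (H, W, 3) uint8 image
    depth: (H, W) float image in metres, 0 or non-finite where invalid
    Each output row is [X, Y, Z, R, G, B] in the camera frame.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row
    valid = np.isfinite(depth) & (depth > 0)
    z = depth[valid]
    x = (u[valid] - cx) * z / fx  # pinhole back-projection
    y = (v[valid] - cy) * z / fy
    colors = rgb[valid].astype(np.float64)
    return np.column_stack([x, y, z, colors])


# 2x2 toy image with a single valid, red pixel at row 0, column 1.
rgb = np.zeros((2, 2, 3), dtype=np.uint8)
rgb[0, 1] = [255, 0, 0]
depth = np.array([[0.0, 2.0],
                  [0.0, 0.0]])
points = rgbd_to_colored_points(rgb, depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

Learned fusion models combine the two modalities in feature space rather than geometrically. This geometric version only shows how colour and depth for the same pixel end up describing the same 3D point.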

3. Enhancing Obstacle Avoidance with Object Semantic Recognition

Object semantic recognition involves identifying and classifying objects within the robot’s environment. By understanding what objects are and where they are located, mobile robots can make informed decisions to navigate around obstacles. RGB-D cameras and multimodal data fusion support this by supplying both the appearance of an object and its position in space.

A typical approach to implementing multimodal data fusion for obstacle avoidance involves:

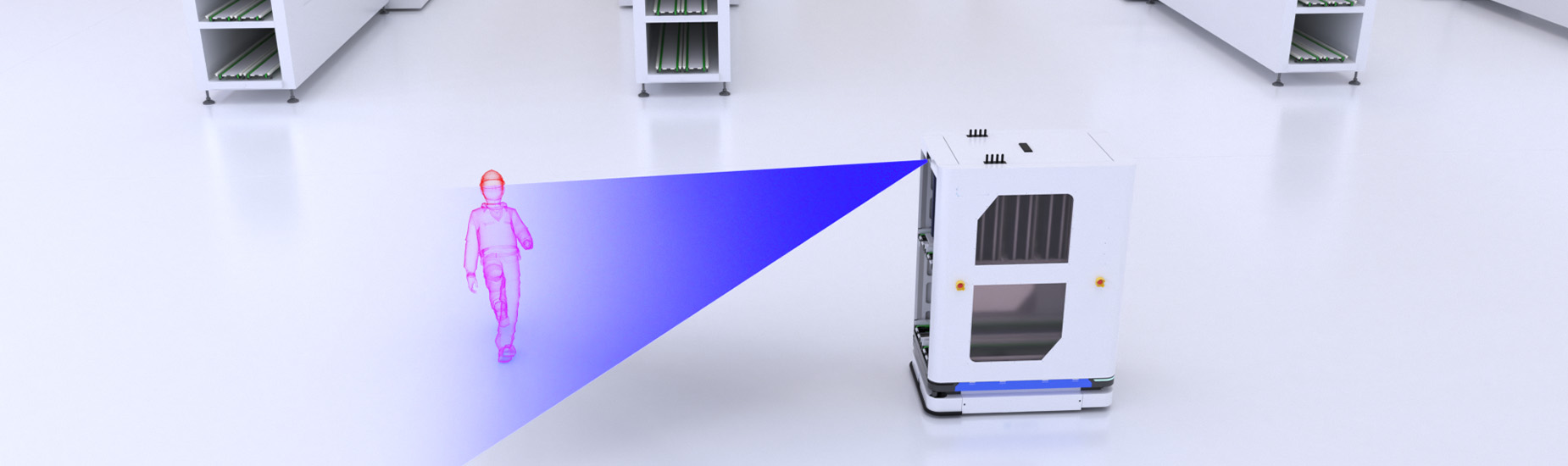

Consider a mobile robot operating in a dynamic environment, such as a busy warehouse. The RGB-D camera captures color and depth data, which the multimodal data fusion model processes to recognize objects such as boxes, shelves, and human workers. As the robot moves, it continuously updates its understanding of the environment, detecting and avoiding obstacles in real time so that it can navigate both safely and efficiently.
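The warehouse scenario suggests one way semantic labels can feed into avoidance: treat different object classes with different safety margins. The following is a minimal sketch of that idea, not the behaviour of any specific product. The `Detection` type, the margin values, and the stop distance are hypothetical, and a detector is assumed to supply a class label plus the depth samples inside each bounding box.

```python
from dataclasses import dataclass
from statistics import median

# Hypothetical per-class safety margins in metres; people get the widest berth.
SAFETY_MARGIN_M = {"person": 1.0, "shelf": 0.3, "box": 0.2}
DEFAULT_MARGIN_M = 0.5  # used for classes without an explicit margin


@dataclass
class Detection:
    label: str
    depths_m: list  # depth samples inside the bounding box, 0 = invalid


def obstacle_range(det):
    """Median of the valid depth samples, or None if there are none.

    The median is less sensitive than the mean to background pixels
    that fall inside the bounding box.
    """
    valid = [d for d in det.depths_m if d > 0]
    return median(valid) if valid else None


def must_stop_or_replan(det, stop_distance_m=0.5):
    """True if the object is inside the robot's class-dependent keep-out range."""
    rng = obstacle_range(det)
    if rng is None:
        return True  # no usable depth: treat conservatively as too close
    margin = SAFETY_MARGIN_M.get(det.label, DEFAULT_MARGIN_M)
    return rng <= stop_distance_m + margin


worker = Detection("person", [1.2, 1.2, 1.3, 0.0, 4.0])
carton = Detection("box", [1.2, 1.2, 1.3, 0.0, 4.0])
print(must_stop_or_replan(worker), must_stop_or_replan(carton))
```

Both objects are at the same measured range of 1.2 m, but only the person falls inside its keep-out distance (1.5 m versus 0.7 m for the box), so the same geometry leads to different decisions depending on the recognized class.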

The integration of RGB-D cameras and multimodal data fusion significantly enhances the obstacle avoidance capabilities of mobile robots, thus improving industrial robot safety. By combining visual and spatial information, these technologies enable robots to navigate complex environments with higher accuracy and efficiency. As mobile robots continue to evolve, the adoption of these advanced recognition and avoidance systems will play a crucial role in their operational success and safety.


©Copyright 2023 Zhejiang MRDVS Technology Co.,Ltd.